LambdaSim: your model as a service

by Marco Bonvini on Sunday March 26, 2017

Sometimes we face technical challenges like predicting the behaviour of a car when we brake at 60 mph. We spend a lot of time writing equations, testing assumptions and building a model that represents reality in a meaningful and useful way. The end product, the model, is like an artwork. It’s beautiful to the eyes of the “artist” who conceived it, and an enigma for anyone else. Wouldn’t it be nice if we were able to share our creations (i.e., the models) and let others use them and produce results with a few clicks? In an era where everything is a service, this would be called Model as a service. TL;DR Do you have a model? Would you like to share it with others and eventually monetize it? Have a look at LambdaSim.

The problem

Why do people use Uber? Because they need to reach a certain place and Uber provides a convenient service that gets them where they want to go. Why do people like GMail? Because it’s dead simple, nice looking and fast. Also, GMail works in your browser and doesn’t require an installation. What do Uber and GMail have in common? They’re easy to use.

Engineering isn’t different. Engineers have questions they need to answer. “How thick should we make this wall? How big should the battery be to drive 100 miles?” Luckily, there are engineers, physicists, mathematicians, and software engineers busy writing models that answer all sorts of questions. They just have to share their models… Unfortunately this mundane task is harder than it seems.

How to share a model

Ok, looks like there is a problem to solve. How can we share a model with another person? And by another person I mean any person, not my colleague who uses the same tools I use, has a Ph.D. in physics and started hacking with Linux in ‘99.

One could start by building an executable and sharing it with the client. Perfect. But, wait a minute, if we build an executable we have to support Windows, Mac OS and Linux. Also, how do we visualize the results? Our model is great for computing data but it lacks a user interface. Are we going to build a native user interface for every model that is shared with a client? By the way, how do we make sure our model is used according to the terms and conditions? How do we prevent people from stealing it? What if the client needs to run thousands of simulations? Who is going to tell the client that someone, not us, will have to manage a cluster of computers or a server running their simulations? It looks like instead of making our client’s life easier we’re making it more difficult.

Maybe I was wrong. We should have a server and build an API around the model so everyone with the proper credentials can run simulations. Great, we can have users pay a monthly subscription and we’re done. We can also let users run thousands of simulations in parallel and dispatch them on our cluster of computers. But wait a moment, this looks like a lot of work. We’re not Google or Amazon. By using a server we avoid licensing problems but we have to make sure all our systems are updated and security patches are applied. We have to make sure our authentication system is sound. We have to implement a mechanism for scaling the number of computers running simulations and provision the right number, so that there are enough machines when they are needed but not so many that we lose money. By the way, we have to operate this 24/7, and what reliability can we claim? 99.9%? 99.99%?
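To put those reliability figures in perspective, here is a minimal sketch that converts an availability target into the downtime it allows per year. The function name is just illustrative, and the calculation ignores leap years.

```python
# Convert an availability target (in percent) into allowed downtime per year.
HOURS_PER_YEAR = 365 * 24  # ignoring leap years


def allowed_downtime_hours(availability_percent):
    """Hours per year a service may be down while still meeting the target."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    for target in (99.9, 99.99):
        hours = allowed_downtime_hours(target)
        print(f"{target}% availability allows {hours:.2f} hours of downtime per year")
```

In other words, 99.9% leaves us a bit less than nine hours a year for every outage, upgrade and mistake combined, and 99.99% leaves less than one hour.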

As always there isn’t an ideal solution. We should look for solutions that are simple and convenient for end users while keeping the overhead for developers as small as possible. LambdaSim attempts to solve this problem.

Serverless architecture

Over the last few years a new trend has appeared in the IT world: serverless computing. As described in Wikipedia, despite its name serverless computing still involves servers. The name “serverless computing” is used because the business or person that owns the system does not have to purchase, rent or provision servers or virtual machines for the back-end code to run on.

By deploying our models on a serverless computing infrastructure we won’t have to pay for servers when they aren’t processing requests. More importantly, the service provider will handle security updates, monitoring, and all the other administrative tasks required to operate a cloud infrastructure that scales from one to thousands or millions of requests.

The serverless architecture has been increasingly adopted by the community, and all major cloud service providers (Amazon, Google and Microsoft) have their own version. For example, the Amazon Web Services (AWS) serverless product is called Lambda and is probably the most mature of the three.

Something I’d like to stress is that services like AWS Lambda are ready to use and they are part of these companies’ core business. What is the likelihood that a small/medium company can build a system that is better than Amazon’s or Google’s? As always by standing on the shoulders of giants we can look further and concentrate on more important things, like designing a model with better interfaces that reduces the number of parameters required to get results.

LambdaSim: how it works

How does LambdaSim make deploying and sharing a simulation model easy?

LambdaSim is implemented on top of AWS Lambda. This means no servers to manage and the ability to scale depending on our needs. The system uses AWS Lambda for two reasons. First, AWS is a de facto industry standard and second, it supports Python (Google’s Cloud Functions support only JavaScript).

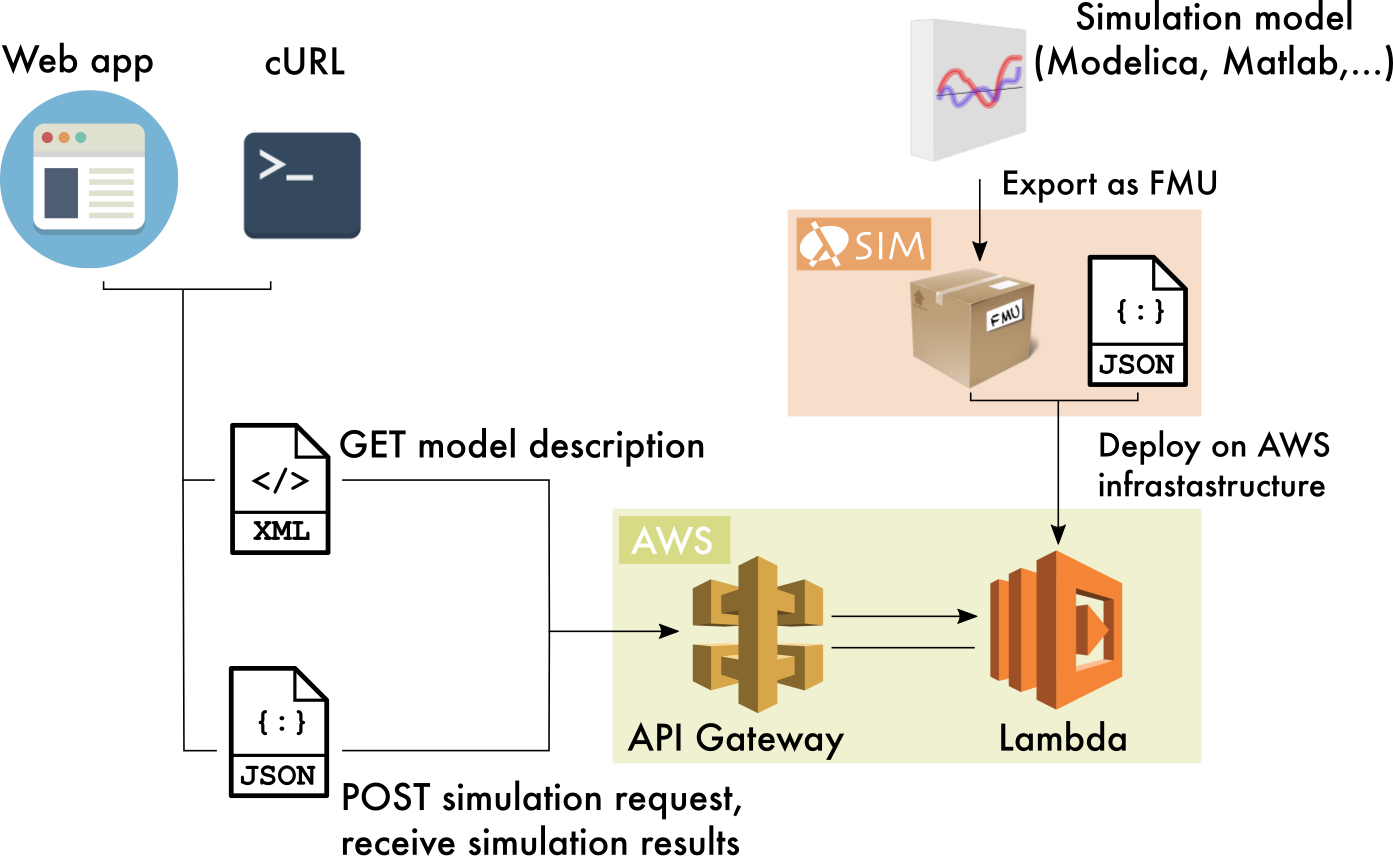

The following diagram shows how LambdaSim works.

It all starts in the upper right corner. One builds a simulation model using either Modelica or Matlab. The model is then exported according to a standard called FMI (Functional Mockup Interface), which produces a binary file called an FMU (Functional Mockup Unit).
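If you are curious about what is inside an FMU, the FMI standard defines it as a zip archive with a modelDescription.xml file at its root. The sketch below uses only the Python standard library to read the model name and the list of variables from that file. It assumes an FMI 1.0 or 2.0 FMU (where variables are `ScalarVariable` elements), the function name `describe_fmu` is hypothetical, and this is not how LambdaSim itself reads the model.

```python
import xml.etree.ElementTree as ET
import zipfile


def describe_fmu(fmu_path):
    """Return basic metadata read from the modelDescription.xml inside an FMU."""
    # An FMU is a zip archive; modelDescription.xml sits at its root.
    with zipfile.ZipFile(fmu_path) as archive:
        with archive.open("modelDescription.xml") as xml_file:
            root = ET.parse(xml_file).getroot()

    # FMI 1.0 and 2.0 list every variable as a ScalarVariable element.
    variables = [
        {"name": var.get("name"), "causality": var.get("causality")}
        for var in root.iter("ScalarVariable")
    ]
    return {
        "model_name": root.get("modelName"),
        "fmi_version": root.get("fmiVersion"),
        "variables": variables,
    }
```

Calling `describe_fmu("my_model.fmu")` (the file name is a placeholder) returns a dictionary that could be serialized to JSON, which is the kind of model metadata a web front end needs before it can offer inputs and plots.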

LambdaSim takes the binary FMU file together with a JSON configuration and automatically builds a series of AWS services that run simulations in response to requests made through a RESTful API.

LambdaSim mainly uses two AWS services:

- Lambda, the service that operates on-demand servers that run simulations and return metadata associated with the model,

- API Gateway, the service that exposes the server via a public REST API.
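To make the division of labour between the two services concrete, here is a minimal sketch of a Python function that Lambda could run for each request forwarded by API Gateway (using its proxy integration, where query parameters arrive in `queryStringParameters` and the function returns a status code, headers and a string body). This is not LambdaSim’s actual code: the parameter names `tau` and `final_time` are hypothetical, and a hand-written first order model integrated with explicit Euler steps stands in for the FMU simulation.

```python
import json


def simulate_first_order(tau, u, x0, final_time, dt=0.01):
    """Integrate dx/dt = (u - x) / tau from x0 with explicit Euler steps."""
    steps = int(round(final_time / dt))
    time, x = [0.0], [x0]
    for i in range(steps):
        x.append(x[-1] + dt * (u - x[-1]) / tau)
        time.append((i + 1) * dt)
    return {"time": time, "x": x}


def _response(status_code, payload):
    """Build a response in the shape API Gateway's proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event, context):
    """Entry point that Lambda invokes for each incoming request."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    try:
        tau = float(params.get("tau", 1.0))
        final_time = float(params.get("final_time", 1.0))
    except ValueError:
        return _response(400, {"error": "tau and final_time must be numbers"})
    if tau <= 0 or final_time <= 0:
        return _response(400, {"error": "tau and final_time must be positive"})

    result = simulate_first_order(tau=tau, u=1.0, x0=0.0, final_time=final_time)
    return _response(200, result)
```

The function keeps no state between calls, which is what lets the provider start as many copies as there are concurrent requests.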

If you’re not comfortable making your model available to everyone, API Gateway provides several options to restrict access and to define usage tiers and limits. You can even let people pay for using your API (see AWS Marketplace).
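From the user’s side, calling a restricted API could look like the sketch below. API Gateway expects API keys in the `x-api-key` header; everything else here is an assumption made for illustration, including the URL, the query parameter names and the shape of the JSON response.

```python
import json
import urllib.parse
import urllib.request


def build_simulation_request(base_url, api_key, **params):
    """Prepare a GET request that carries the API key and simulation parameters."""
    query = urllib.parse.urlencode(params)
    return urllib.request.Request(
        f"{base_url}?{query}",
        headers={"x-api-key": api_key},  # header API Gateway checks for API keys
        method="GET",
    )


def run_simulation(base_url, api_key, **params):
    """Send the request and decode the JSON body returned by the API."""
    request = build_simulation_request(base_url, api_key, **params)
    # A generous timeout leaves room for a slow first call after a period of inactivity.
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical usage; replace the URL and key with the ones of a deployed API:
# results = run_simulation("https://example.invalid/prod/simulate", "MY_KEY", final_time=10)
```

Requests without a valid key are rejected by API Gateway before they ever reach the model, so the simulation code doesn’t need to know anything about authentication.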

For convenience, LambdaSim comes with its own web app. The app lets you browse and interact with APIs created by LambdaSim. The following GIF shows how to load an API, run a simulation and plot the results.

Make your own dashboard

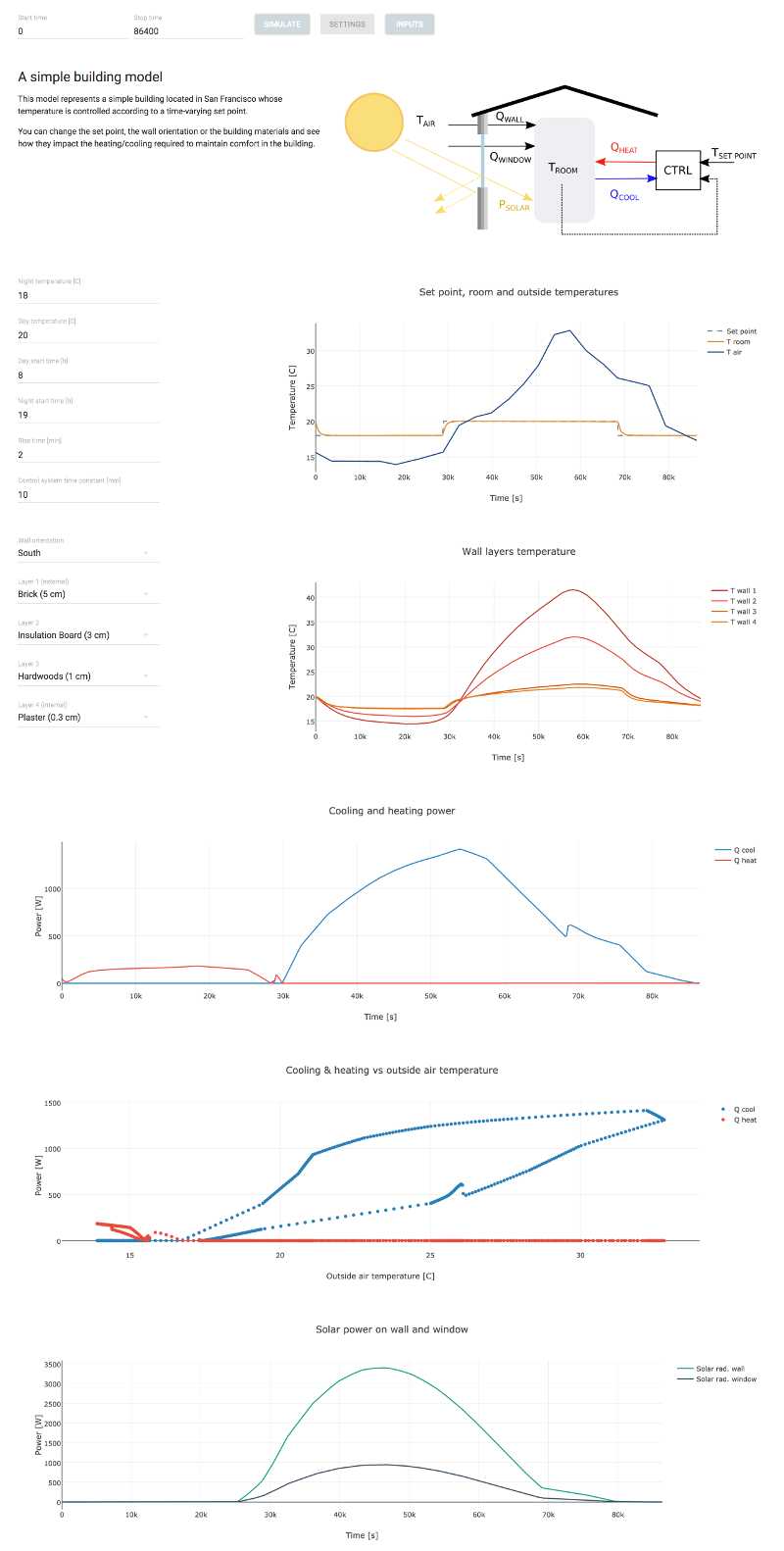

To make the system more user-friendly (both for developers and users), LambdaSim lets you create and customize your own dashboard using a JSON file.

This greatly simplifies the process of creating a nice user interface and therefore makes models more digestible to users. Also, given that building a dashboard with LambdaSim is relatively easy, it’s more likely that model developers will provide a dashboard and not just the model.

The following image shows a dashboard that is automatically created using this JSON file.

Examples

In this section I hope to convince you that sharing a model using LambdaSim is simple and you should try it out. Just click on the following links to see the magic… Please note that if no one uses the model AWS freezes the API, so the first time you load it, it could take a few seconds. After this initialization phase, latencies are on the order of tens to hundreds of milliseconds.

The first example lets you simulate a simple First Order Model.

The second example is a bit more complete. Here you can simulate a small Building Energy Model. This example comes with a dashboard that has been customized using the following JSON file.

The source code and the examples presented in this section are available on GitHub at mbonvini/LambdaSim.

Conclusions

What started as an experiment for checking out some of the latest AWS technologies ended up becoming an interesting project. At the very beginning I wasn’t even sure it was possible to squeeze all the requirements (PyFMI, FMILib, Assimulo, Sundials, numpy, scipy, etc.) needed to run simulations into the limited environment provided by AWS Lambda. But it looks like it’s possible!

I really hope you will find LambdaSim useful. I encourage you to look at the source code in the repository and use it to share some great models.

I’d like to thank Modelon for the tools they build and share with the Modelica and FMI community; without them this wouldn’t have been possible.